Feature selection can be an important part of the machine learning process as it has the ability to greatly improve the performance of our models. While it might seem intuitive to provide your model with all of the information you have with the thinking that the more data you provide the better it can learn and generalize, it's important to also recognize that the data necessary to train a model grows exponentially with the number of features we use to train the model. This is commonly referred to as the curse of dimensionality. There used to be a great interactive demonstration of this concept, but it looks like the demo is no longer being hosted. Maybe at some point I'll code one up in d3.js and share it here; until then, check out this video if you're not familiar with the concept.

Ostensibly, some of the features in our dataset may be more important than others - some features might even be entirely irrelevant. But how do we go about systematically choosing the best features to use in our model?

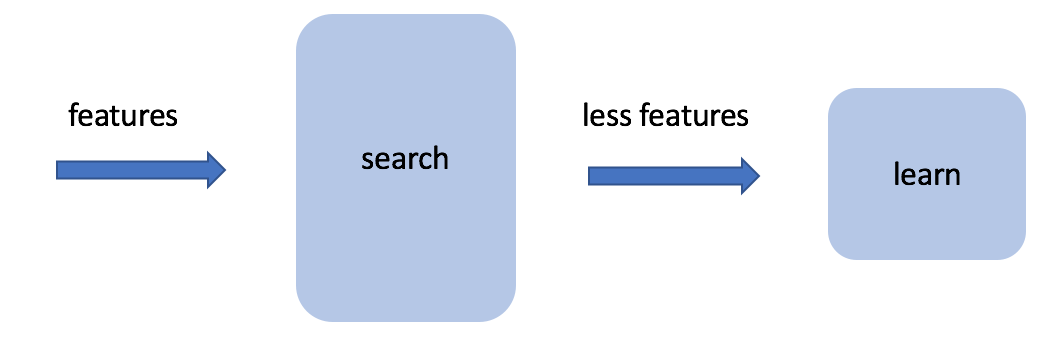

There's two general ways one can approach the problem of selecting features. The first approach, known as filtering, will look at your feature space and select out the best features before we actually feed the data into our model such that the data we train on is a reduced size from our original dataset. We'll define some metric, such as information gain, to evaluate the features.

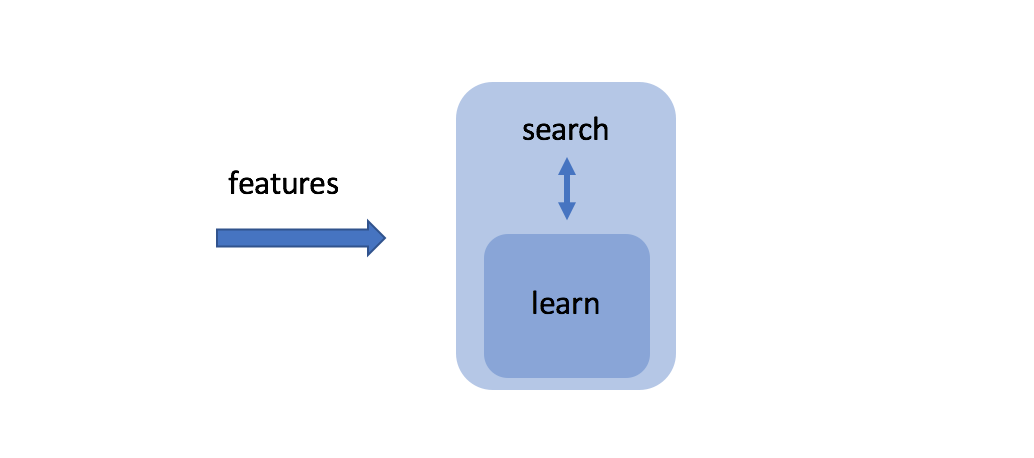

The other approach, known as wrapping, is to simply try out a number of reduced feature-spaces on our model and see what performs the best. In this approach, we'll use all of the features in our search for the optimal selection, combined with performance metrics from the model evaluation to select the best features. While this approach is much slower, we get to actually use the model to aid our feature selection and the result is typically better than filtering.

Ultimately, feature selection is a balance between reducing the amount of data needed to train a model without sacrificing the learning ability of a given model. If you reduce the feature-space too much, it makes it harder to learn, but if you don't reduce the feature-space at all, you may need an absurd amount of data to properly train the model.

Filtering examples

One simple filtering approach is to look at the variance across values for each feature. Features which have very little variance (in other words, features which are largely the same for most observations) will not prove to be helpful during learning, so we can simply ignore the features with a variance below some specified threshold.

from sklearn.feature_selection import VarianceThreshold

selector = VarianceThreshold(threshold= 0.2)

X_reduced = selector.fit_transform(X)

Another filtering approach is to train the dataset on a simple model, such as a decision tree, and then use the ranked feature importances to select the features you'd like to use in your desired machine learning model. You could use this technique to reduce the feature-space before training a more complex model, such as a neural network, which would take longer to train.

from sklearn.ensemble import ExtraTreesClassifier

from sklearn.feature_selection import SelectFromModel

simple_model = ExtraTreesClassifier()

simple_model = simple_model.fit(X, y)

selector = SelectFromModel(simple_model, prefit=True)

X_reduced = selector.transform(X)

Note: ExtraTreesClassifier is a randomized decision tree classifier which samples a random subset of the feature-space when deciding where to make the next split.

Lastly, you could look at each feature individually and perform statistical tests to gauge its relative importance in predicting the output. Sklearn provides selectors to do this by keeping the top $k$ features (where $k$ is defined by the user) with SelectKBest or keeping the top $x%$ of features (where $x$ is defined by the user) with SelectPercentile.

Wrapping examples

When using a wrapping technique, we'll actually use a performance metric from the desired model to aid our feature selection; for example, we could look at the error of a validation set.

There's essentially two places you can start, with all of the features present or none of them. In a forward search, we'll essentially start with nothing and, one by one, add the feature which yields the greatest drop in validation error. We'd continue this process until the incremental gain in model performance drops below a certain threshold.

In a backward search, we'll start with all of the features present in our model and, one by one, eliminate the feature which provides the least amount of detriment to our model performance. We'd continue this process until the detriment to model performance crosses above a certain threshold.

It's also possible to perform a bidirectional search, using a combination of forward and backward search which can take into account the effect of combinations of features on model performance.

We can perform a backward search in sklearn using the recursive feature elimination method. Given a model that assigns weights to features (whether by virtue of coefficients in a linear regression model or feature importances in a decision tree model), we can iteratively prune features with low weights based on the assumption that the weights are a proxy for feature importance. This will continue until you've reached a specified number of features; alternatively, you could combine this technique with cross-validation using RFECV to automatically determine the ideal number of features.

Note: we could also leverage recursive feature elimination on a simple model as a filtering technique to reduce our feature-space before training on a more complex model.